
winiwarter@bordalierinstitute.com

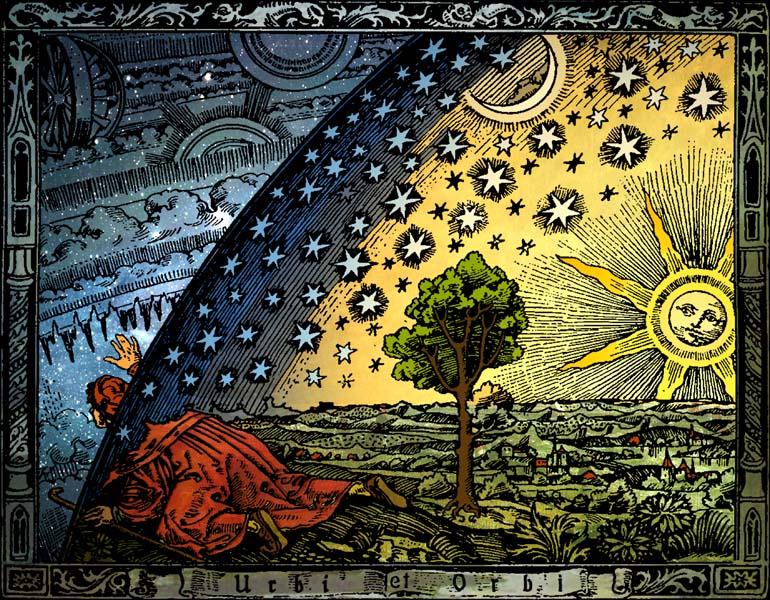

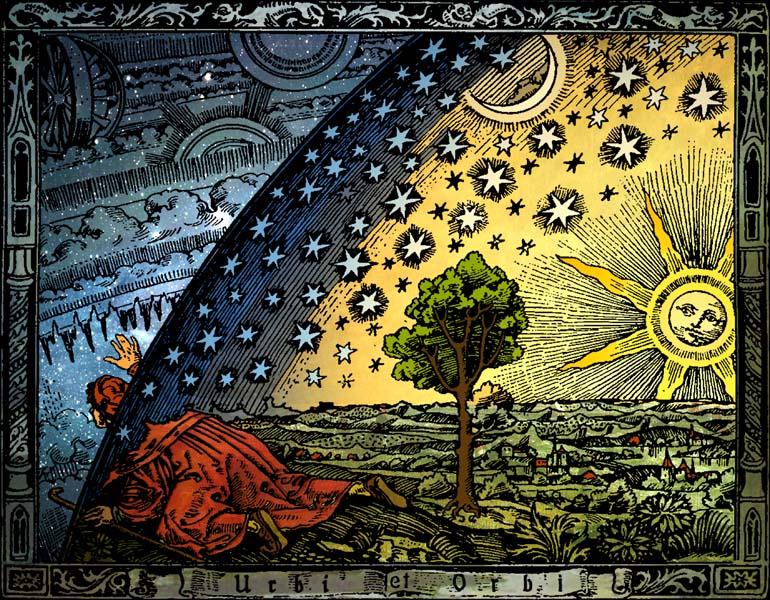

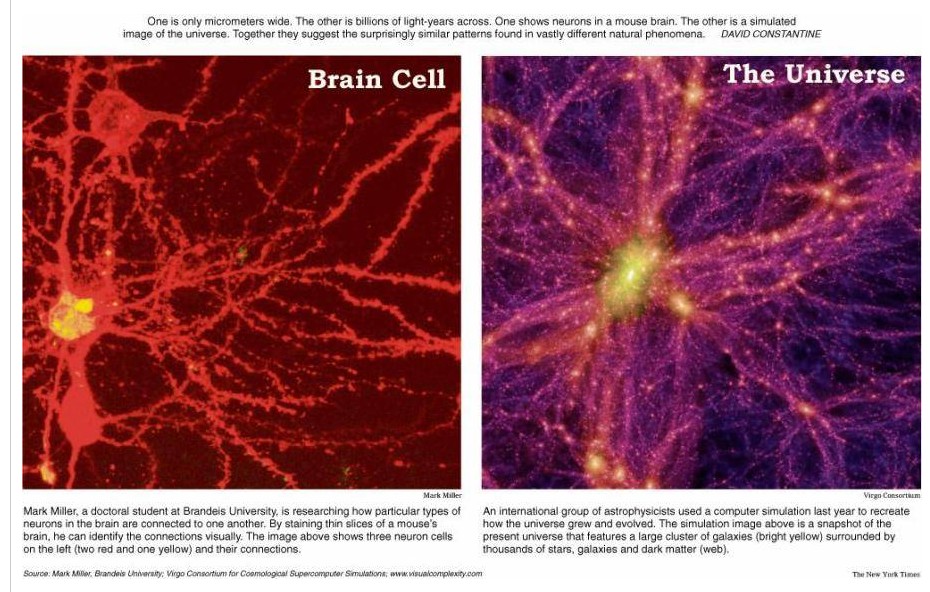

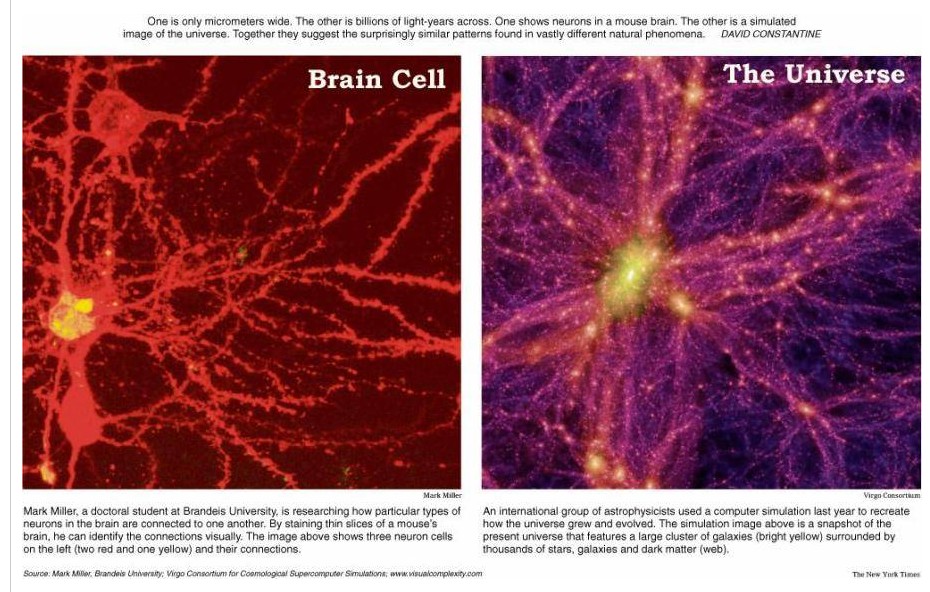

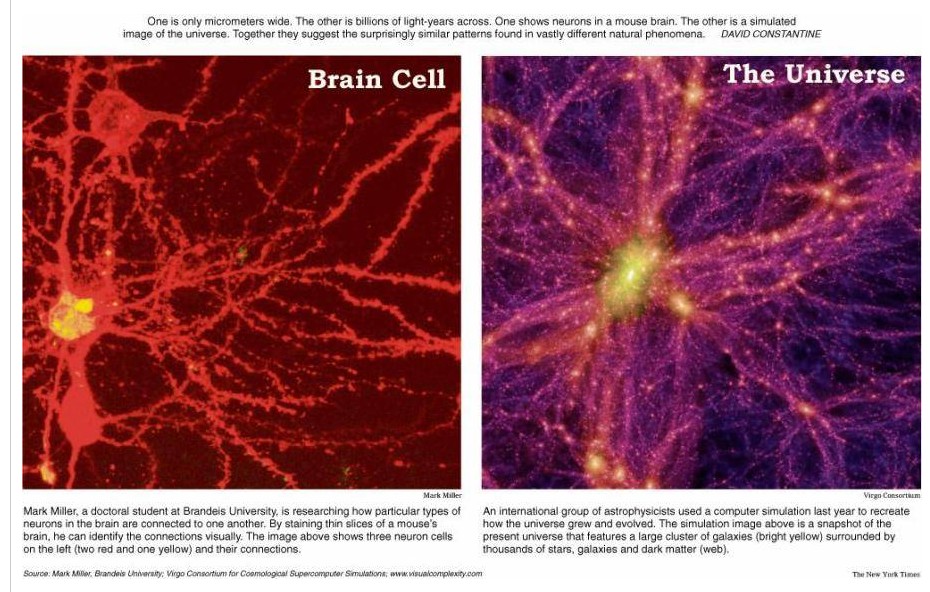

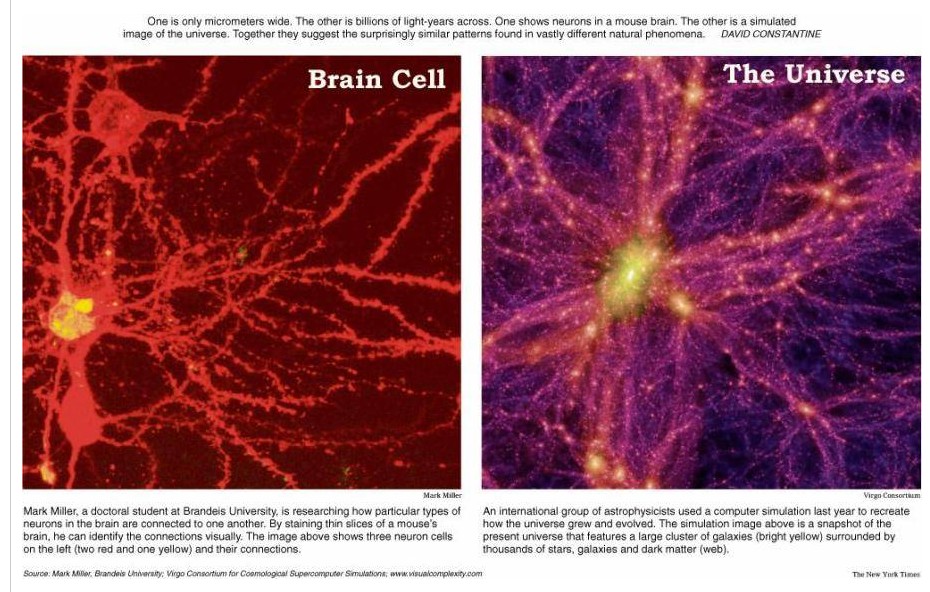

"The discovery of the Universe is the discovery of our Brain"

Peter Winiwarter

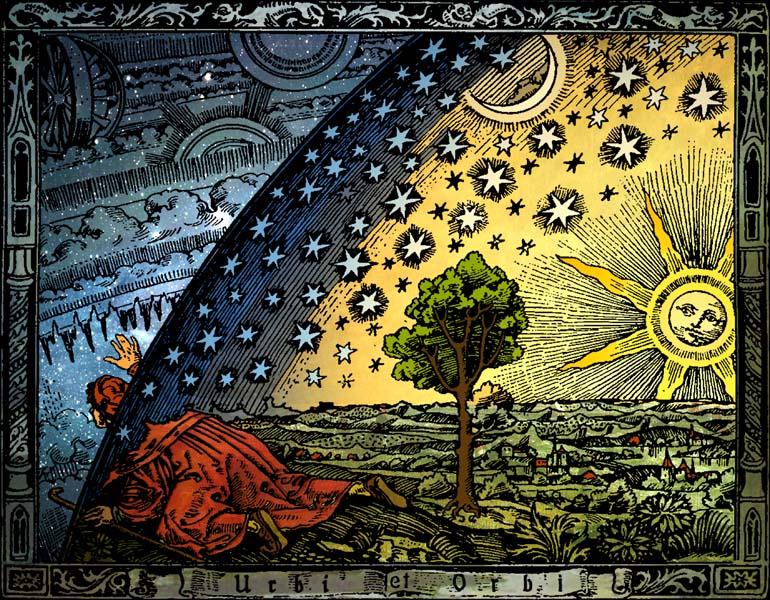

"I think the next century (21st) will be the century of complexity."

Stephen Hawking

note:

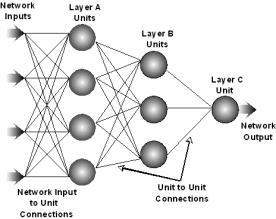

Computer simulation of the evolution of complex systems/networks with neural networks (universal mappers) is a young paradigm, which does not fit into any university or "classical" research department.

Let's wait for the retirement of pure reductionist scientists and watch for the arrival of a new generation of transdisciplinary, holistic, open-system approaches like the Santa Fe Institute, naturalgenesis.net and the Evo Devo Universe community.

"New paradigms in science spread not because the adherents of the old paradigm are convinced by the new ideas, but because the adherents of the old paradigm die."

Max Planck

on quantum physics vs. classical physics.

"We are all agreed that your theory is crazy. The question which divides us is whether it is crazy enough to have a chance of being correct. My own feeling is that it is not crazy enough."

Niels Bohr

on Pauli's theory of elementary particles

from Arne A. Wyller's The Planetary Mind

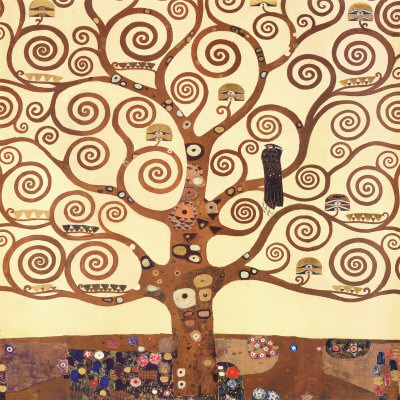

"Life

and mind have a common abstract pattern or set of basic organizational

properties. The functional properties characteristic of mind are an

enriched version of the functional properties that are fundamental to

life in general. Mind is literally life-like. "

Godfrey-Smith, P. (1996). Complexity and the

Function of Mind in Nature. Cambridge: Cambridge University Press.

"Mind is literally life-like. The Universe and Life are literally mind-like. "

Peter Winiwarter (2008). Network Nature. www.bordalierinstitute.com

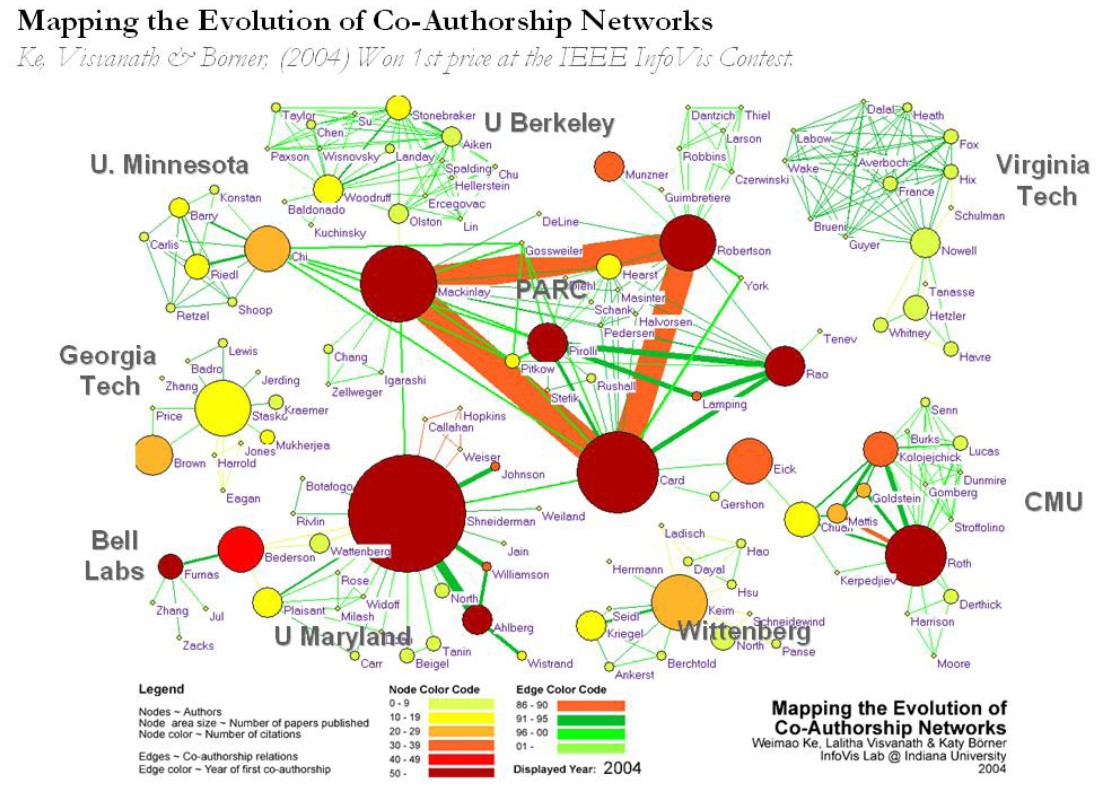

Sergej Dorogovtsev

Summary:

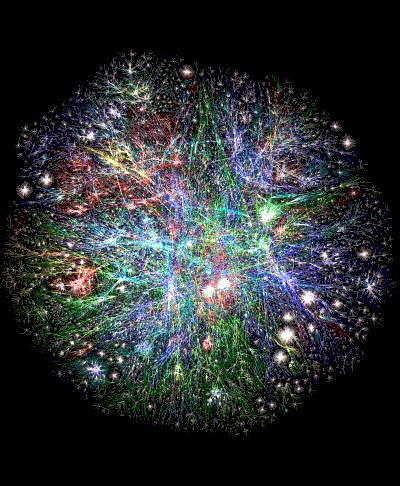

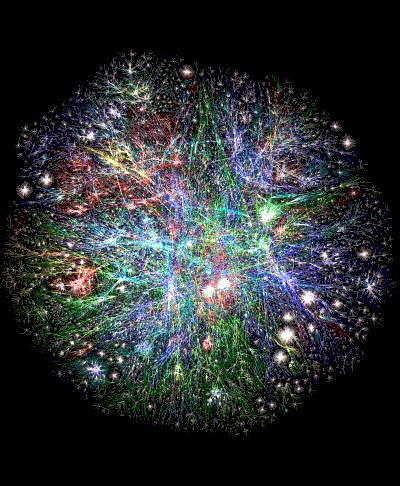

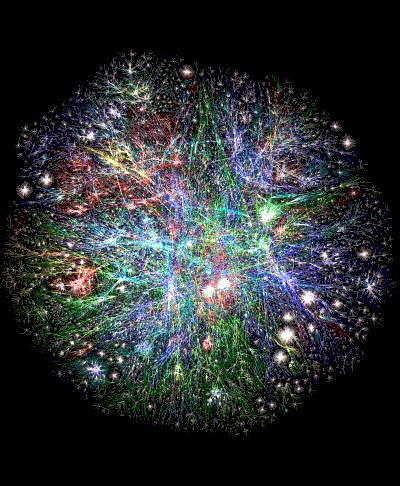

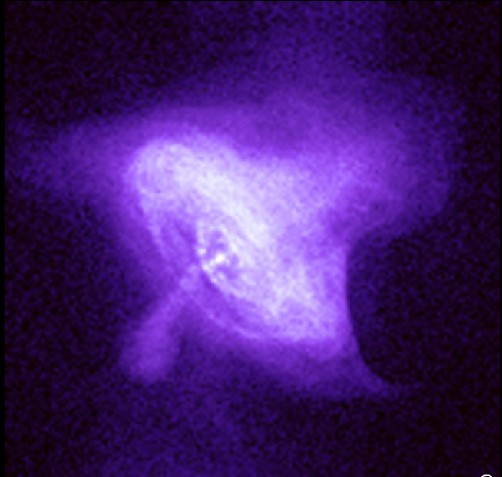

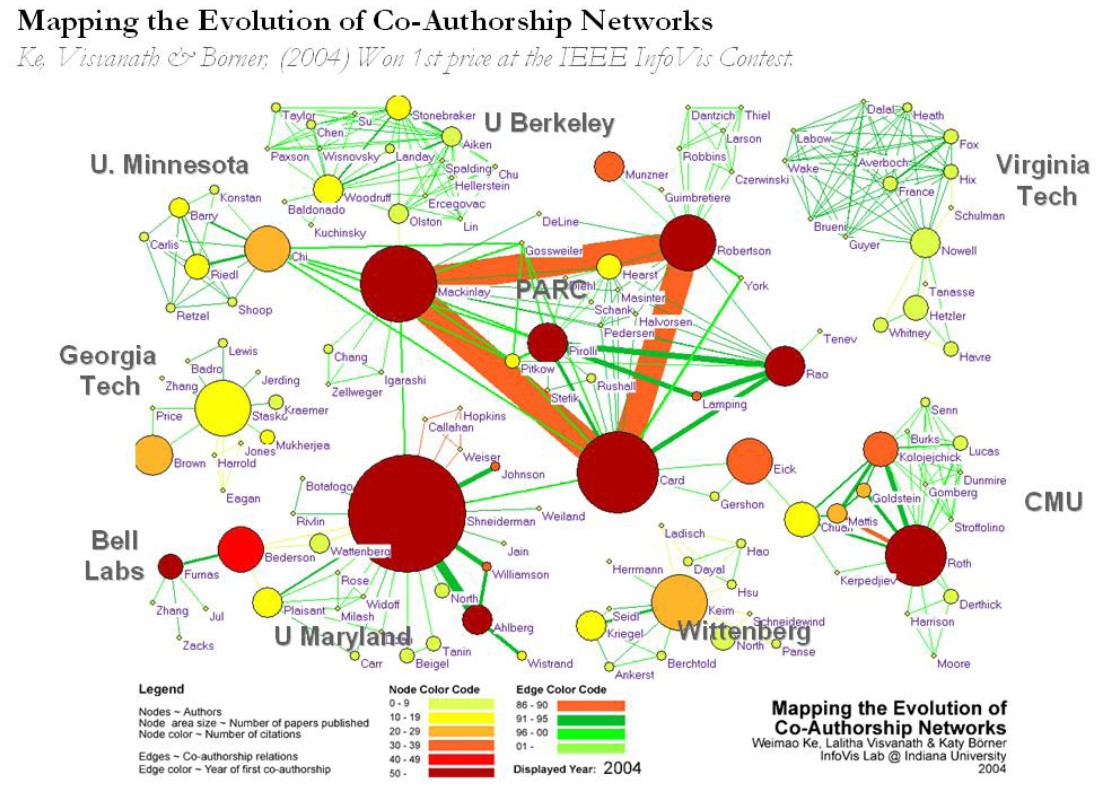

Complex Systems, Neural Networks & Cosmic Evolution

The Intelligence of Networks & the Networks of Intelligence, the universe: a fractal hierarchy of intelligent neural networks

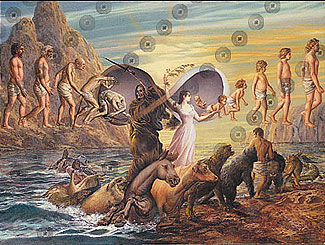

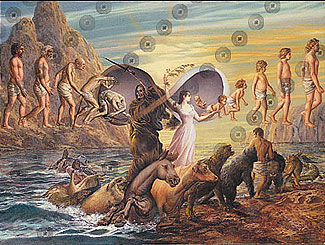

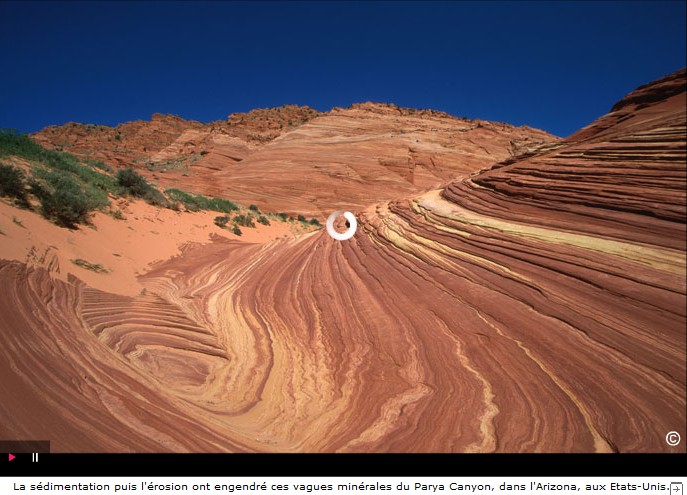

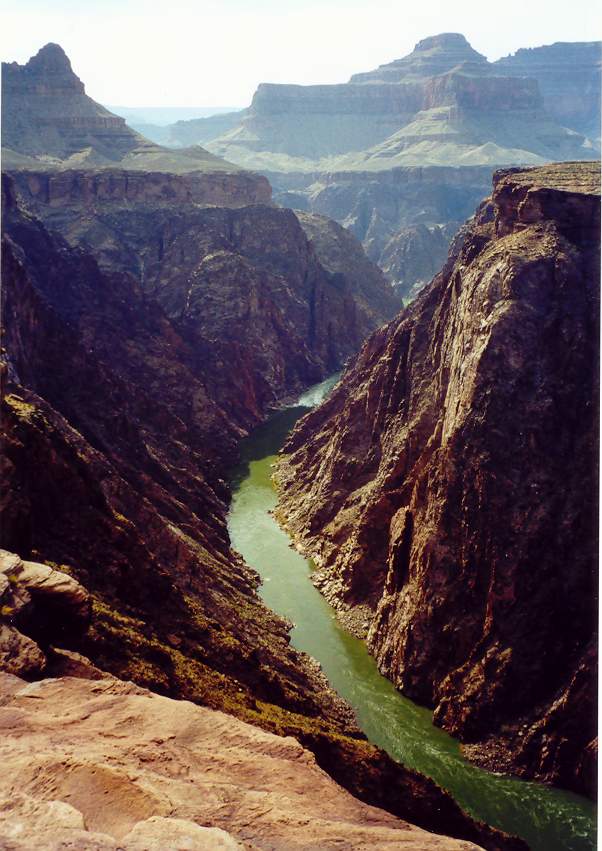

All networks of evolution, from the Big Bang to the World Wide Web, that reveal Pareto-Zipf-Mandelbrot (parabolic fractal) power laws are:

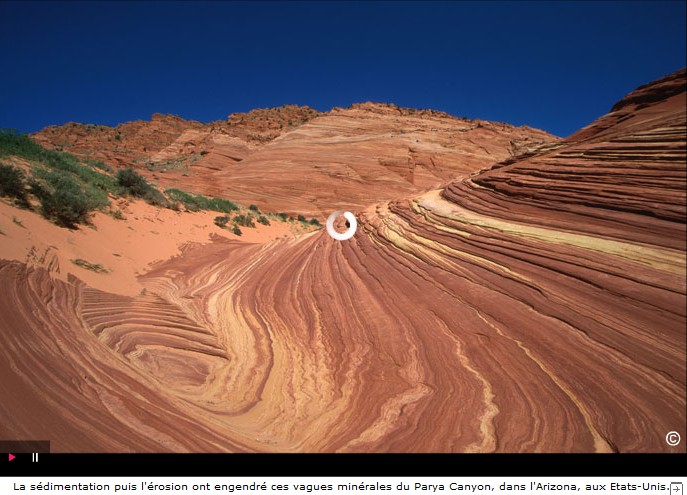

- energy/information transformation systems

- built of basic energy/information transformation processors that are born and run to death in an irreversible way (birth and death processors).

- all processors are linked in a complex "small world" network that can be mapped onto a multilayer perceptron network of artificial neurons.

- it's the global field generated by all processors that "drives" the process of evolution, based on energy optimization specific to the level of evolution: GUT, gravitation, strong nuclear, weak nuclear, electromagnetic, chemical, geothermal, wind, water, fire, genetic code, spoken words, written codes, computer codes, Web services ...

- ritualization: repetitive use of pathways, Hebb's rule* (cells that fire together wire together) and the Pareto frontier (you can't make anyone better off without making someone else worse off) hardwire the networks' information flow into "engrams"**, just as timetables hardwire a railroad or air transportation network.

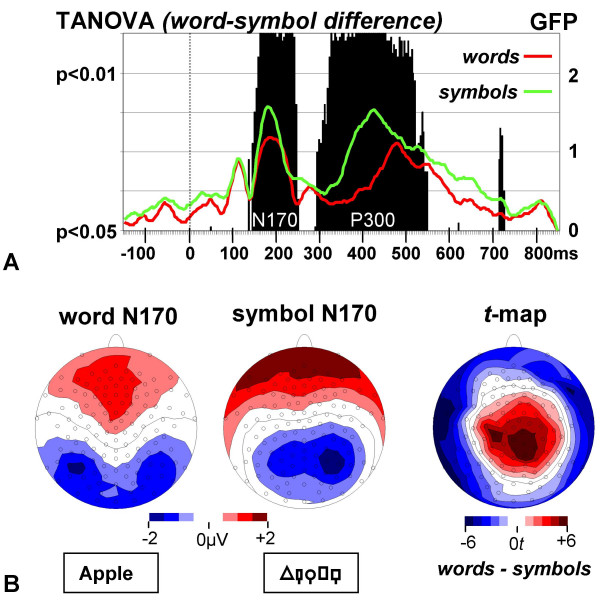

- *Hebbian theory has been the primary basis for the conventional view that, when analyzed from a holistic level, engrams are neuronal nets or neural networks.

- ** "If the inputs to a system cause the same pattern of activity to occur repeatedly, the set of active elements constituting that pattern will become increasingly strongly interassociated. That is, each element will tend to turn on every other element and (with negative weights) to turn off the elements that do not form part of the pattern. To put it another way, the pattern as a whole will become 'auto-associated'. We may call a learned (auto-associated) pattern an engram."

(op. cit., p. 44) Gordon Allport
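The auto-association described in the footnotes can be sketched with the textbook Hebbian update Δw_ij = η·x_i·x_j. This is a minimal illustration under assumed choices, not the author's model: units are bipolar (+1 active, -1 inactive), the learning rate `ETA`, the function names and the five-unit pattern are hypothetical, and self-connections are excluded.

```python
# Minimal Hebbian auto-association sketch (illustrative assumptions, not the author's model):
# bipolar units (+1 active, -1 inactive), hypothetical learning rate ETA, no self-connections.

ETA = 0.1  # hypothetical learning rate


def hebbian_update(weights, pattern, eta=ETA):
    """One Hebbian step: w_ij += eta * x_i * x_j for every pair i != j."""
    n = len(pattern)
    for i in range(n):
        for j in range(n):
            if i != j:
                weights[i][j] += eta * pattern[i] * pattern[j]
    return weights


def train(pattern, runs, eta=ETA):
    """Present the same activity pattern `runs` times, starting from zero weights."""
    n = len(pattern)
    weights = [[0.0] * n for _ in range(n)]
    for _ in range(runs):
        hebbian_update(weights, pattern, eta)
    return weights


if __name__ == "__main__":
    pattern = [1, 1, 1, -1, -1]  # units 0-2 form the pattern, units 3-4 are off
    w = train(pattern, runs=10)
    print(round(w[0][1], 2))  # pattern unit to pattern unit: 1.0
    print(round(w[0][3], 2))  # pattern unit to non-pattern unit: -1.0
```

Repeated runs make the weights inside the pattern grow positive and the weights from pattern units to non-pattern units grow negative, which is the interassociation the quote calls an engram. With bipolar coding the two inactive units also become positively coupled to each other.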

Lotka said, "The principle of natural selection reveals itself as capable of yielding information which the first and second laws of thermodynamics are not competent to furnish. The two fundamental laws of thermodynamics are, of course, insufficient to determine the course of events in a physical system. They tell us that certain things cannot happen, but they do not tell us what does happen."

1) First law of genesis: the complexity of a self-organized system/network can only increase or remain constant (irreversibility of evolution, the arrow of internal system time).

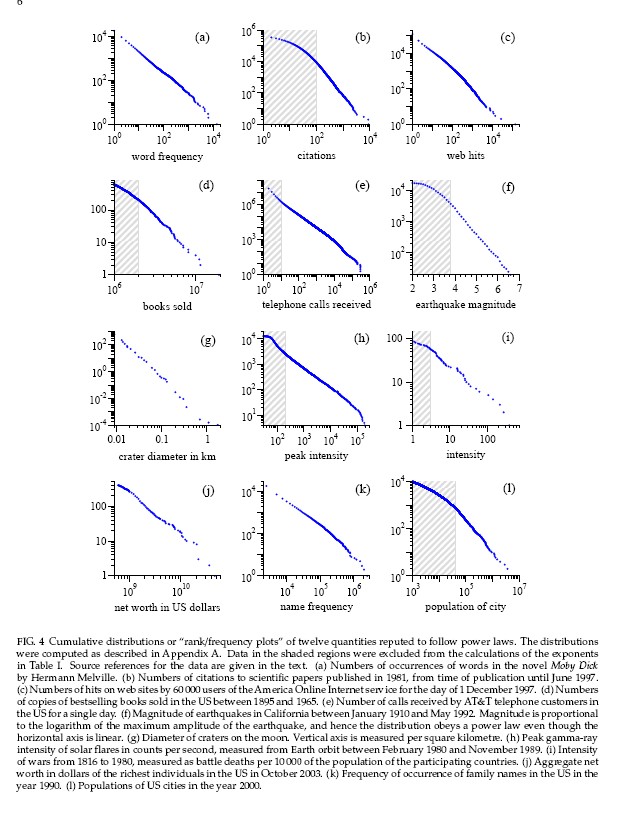

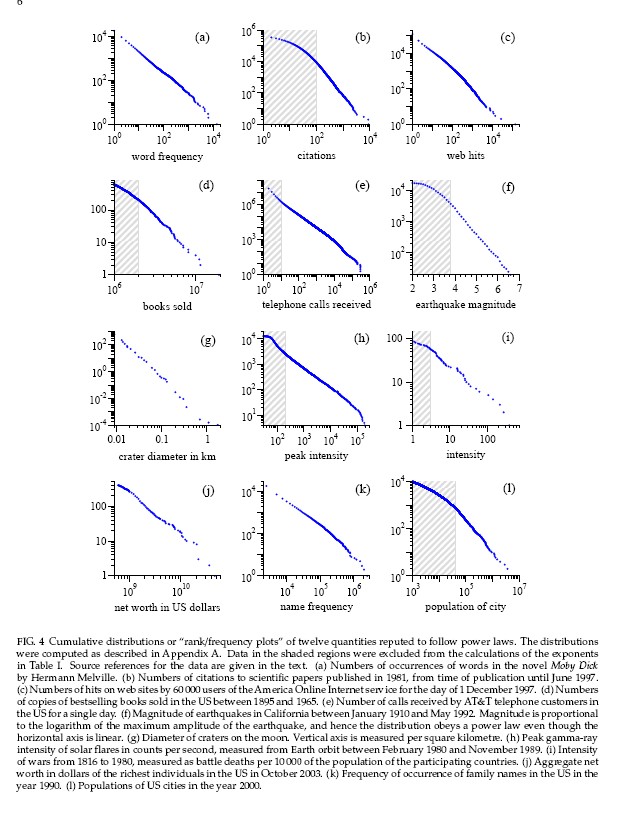

2) Second law of genesis: for self-organized systems/networks we observe element/symptom size distributions of the long-tailed Pareto-Zipf-Mandelbrot (PZM) type (parabolic fractal 'generalized life symptoms').
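One common way to make the PZM shape concrete is the Zipf-Mandelbrot rank-size law f(r) = C/(r + q)^s, together with a "parabolic fractal" reading in which log size is a quadratic in log rank: log f = a + b·log r + c·(log r)². The sketch below fits that quadratic by ordinary least squares on log-log rank-size data. The function names, the parameter values `scale`, `q`, `s` and the small pure-Python solver are illustrative assumptions, not the author's procedure.

```python
import math


def zipf_mandelbrot(rank, scale=1000.0, q=2.0, s=1.0):
    """Zipf-Mandelbrot size at a given rank: scale / (rank + q) ** s (illustrative parameters)."""
    return scale / (rank + q) ** s


def solve3(a, b):
    """Solve the 3x3 linear system a . x = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * 3
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][k] * x[k] for k in range(r + 1, 3))) / m[r][r]
    return x


def fit_parabolic_fractal(sizes):
    """Least-squares fit of log f = a + b*log r + c*(log r)**2 to a rank-size list.

    Sizes are sorted largest first so that rank 1 is the biggest element.
    Returns (a, b, c); c < 0 means the fitted log-log curve bends downward (concave).
    """
    ordered = sorted(sizes, reverse=True)
    xs = [math.log(r) for r in range(1, len(ordered) + 1)]
    ys = [math.log(f) for f in ordered]
    # Normal equations for the basis (1, x, x^2): sums of x^k and of y * x^k.
    sx = [sum(x ** k for x in xs) for k in range(5)]
    sxy = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    normal = [[sx[0], sx[1], sx[2]], [sx[1], sx[2], sx[3]], [sx[2], sx[3], sx[4]]]
    a, b, c = solve3(normal, sxy)
    return a, b, c


if __name__ == "__main__":
    sizes = [zipf_mandelbrot(r) for r in range(1, 101)]
    print(fit_parabolic_fractal(sizes))
```

A pure power law f = C·r^(-s) gives c = 0 and b = -s, so the curvature coefficient c is what separates a straight Zipf line from the bent "parabolic" profile.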

3) Like Gaussian probability distributions, long-tailed Pareto-Zipf distributions are stable under stochastic addition (mergers and splits of systems/networks): Gauss + Gauss yields Gauss; Pareto-Zipf + Pareto-Zipf yields Pareto-Zipf.
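The Pareto-Zipf half of this claim can be checked numerically in one specific sense: the sum of two independent power-law variables keeps the same tail exponent. The sketch computes the exact distribution of X + Y by discrete convolution of a truncated power-law pmf and compares log-log slopes. The exponent `alpha`, the cutoff `n_max`, the slope window and all function names are hypothetical choices; the check does not test full Lévy stability, which in the strict sense applies only to tail exponents below 2.

```python
import math


def power_law_pmf(alpha, n_max):
    """Truncated discrete power law: p(k) proportional to k**-(alpha + 1) for k = 1..n_max.

    alpha is the tail exponent of the survival function; index 0 has probability 0.
    """
    weights = [0.0] + [k ** -(alpha + 1) for k in range(1, n_max + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve(p, q):
    """pmf of X + Y for independent X ~ p and Y ~ q (exact discrete convolution)."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        if pi == 0.0:
            continue
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out


def loglog_slope(pmf, lo, hi):
    """Slope of log p(s) against log s between s = lo and s = hi."""
    return (math.log(pmf[hi]) - math.log(pmf[lo])) / (math.log(hi) - math.log(lo))


if __name__ == "__main__":
    alpha, n_max = 1.5, 1000
    p = power_law_pmf(alpha, n_max)
    # Apart from normalization, the sum's pmf is unaffected by the truncation
    # for s <= n_max + 1, so the slope window stays below that.
    s = convolve(p, p)
    print(loglog_slope(p, 200, 800), loglog_slope(s, 200, 800))
```

For `alpha = 1.5` both slopes come out close to -(alpha + 1) = -2.5: far in the tail the sum's pmf is roughly twice the single-variable pmf, but the power-law exponent is unchanged.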

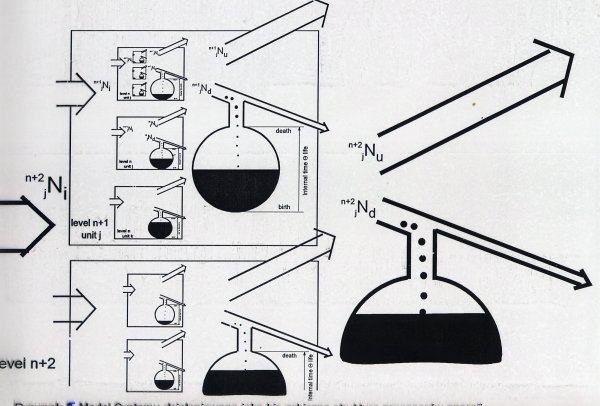

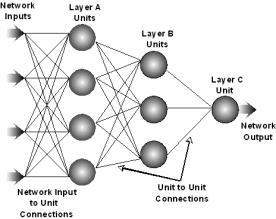

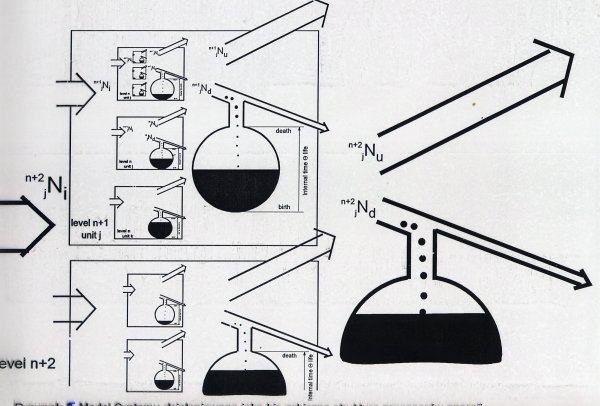

4) Self-organized systems/networks can be mapped onto a hierarchy of binary threshold automata (birth & death processors), modelled by a neural network with a multilayer feed-forward topology (multilayer perceptron) that "learns" through gradient-descent backpropagation of errors over consecutive "runs", i.e. cycles of energy/information transformation (energy-information equivalence).
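The multilayer perceptron and backpropagation named in point 4 can be illustrated with a tiny network trained on XOR. In this sketch, sigmoid units stand in for the binary threshold automata (a step function has no useful gradient), one call to `train_run` plays the role of one "run", and the class name, layer sizes, learning rate and seed are hypothetical.

```python
import math
import random


def sigmoid(z):
    """Smooth stand-in for a binary threshold unit."""
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """One-hidden-layer perceptron trained by full-batch backpropagation (illustrative)."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        """Return hidden activations and the network output for input x."""
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def loss(self, data):
        """Total squared error E = 1/2 * sum over examples of (t - y)**2."""
        return 0.5 * sum((t - self.forward(x)[1]) ** 2 for x, t in data)

    def train_run(self, data, lr):
        """One 'run': accumulate the error gradient over all examples, then step downhill."""
        g_w1 = [[0.0] * len(row) for row in self.w1]
        g_b1 = [0.0] * len(self.b1)
        g_w2 = [0.0] * len(self.w2)
        g_b2 = 0.0
        for x, t in data:
            h, y = self.forward(x)
            delta_out = (y - t) * y * (1.0 - y)  # dE/dz at the output unit
            g_b2 += delta_out
            for j, hj in enumerate(h):
                g_w2[j] += delta_out * hj
                delta_h = delta_out * self.w2[j] * hj * (1.0 - hj)  # error sent back to hidden unit j
                g_b1[j] += delta_h
                for i, xi in enumerate(x):
                    g_w1[j][i] += delta_h * xi
        self.b2 -= lr * g_b2
        for j in range(len(self.w2)):
            self.w2[j] -= lr * g_w2[j]
            self.b1[j] -= lr * g_b1[j]
            for i in range(len(self.w1[j])):
                self.w1[j][i] -= lr * g_w1[j][i]


if __name__ == "__main__":
    xor = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
    net = TinyMLP(n_in=2, n_hidden=4, seed=1)
    before = net.loss(xor)
    for _ in range(5000):
        net.train_run(xor, lr=0.5)
    print(before, net.loss(xor))
    print([round(net.forward(x)[1]) for x, _ in xor])
```

Accumulating the gradient over the whole data set before updating (full-batch descent) keeps the example short; per-example updates would work as well.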

5) Self-organized systems/networks have memory* (galaxies, ecosystems, world economy, World Wide Web): the memory consists of the global system topology of the network and the local weights and connection strengths of all processors.

* ability to store, retain, and subsequently retrieve information
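To illustrate memory held in connection weights, the sketch below swaps in a Hopfield-style auto-associative network rather than the multilayer perceptron of point 4: patterns are stored in symmetric weights by the Hebbian outer-product rule and retrieved from a corrupted cue by repeated binary threshold updates. The ±1 coding, the function names, the example patterns and the number of sweeps are assumptions made for illustration.

```python
def store(patterns):
    """Hebbian outer-product storage: w_ij = sum over patterns of x_i * x_j, with w_ii = 0."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def recall(w, cue, sweeps=5):
    """Retrieve a stored pattern from a noisy cue by asynchronous threshold updates."""
    state = list(cue)
    n = len(state)
    for _ in range(sweeps):
        for i in range(n):
            field = sum(w[i][j] * state[j] for j in range(n))
            state[i] = 1 if field >= 0 else -1  # binary threshold unit
    return state


if __name__ == "__main__":
    p1 = [1, 1, 1, 1, -1, -1, -1, -1]
    p2 = [1, -1, 1, -1, 1, -1, 1, -1]  # orthogonal to p1
    w = store([p1, p2])
    cue = list(p1)
    cue[0] = -1  # corrupt one unit of p1
    print(recall(w, cue) == p1)  # True
```

Here the "memory" is nothing but the weight matrix: the stored patterns are not kept anywhere else, yet the network restores `p1` from a damaged version of it.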

6) Self-organized systems/networks are learning*. Hebb's rule reinforces memory through consecutive runs of energy/information transformation cycles.

* ability to improve the facility to store, retain, and subsequently retrieve perceived information (memory)

7) Self-organized systems/networks are intelligent*: global error minimization at the system level (the total system "cost" of the web) through consecutive runs of energy/information transformation through the system hierarchy.

* ability to optimize learning as a function of a goal (maximum or minimum principle)
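Point 7's global error minimization can be written in the standard gradient-descent form used by backpropagation. This is a sketch under assumed notation: $E$ is the total squared error over training patterns $p$ and output units $k$, with targets $t$, outputs $y$, weight vector $\mathbf{w}$ and a hypothetical step size $\eta > 0$.

$$
E(\mathbf{w}) = \frac{1}{2}\sum_{p}\sum_{k}\bigl(t_{pk} - y_{pk}(\mathbf{w})\bigr)^{2},
\qquad
\mathbf{w}^{(n+1)} = \mathbf{w}^{(n)} - \eta\,\nabla_{\mathbf{w}}E\bigl(\mathbf{w}^{(n)}\bigr)
$$

To first order in $\eta$, one run changes the error by $E(\mathbf{w}^{(n+1)}) - E(\mathbf{w}^{(n)}) \approx -\eta\,\lVert\nabla_{\mathbf{w}}E\rVert^{2} \le 0$, so with a small enough step each run lowers, or at worst leaves unchanged, the total system "cost".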

8) Evolution is self-organization of networks of matter and mind, from astrophysical to linguistic and computer networks of the World Wide Web, following the same seven conceptual categories in conceptual space.

In short, the properties of artificial neural networks of the multilayer perceptron type are common to all systems/networks for which we observe PZM (Pareto-Zipf-Mandelbrot, parabolic fractal) distributions.

Hence:

- self-organized systems / small-world networks / the universe(s) have memory (engrams: topology of tree structure, hypercycle structure)

- self-organized systems / small-world networks / the universe(s) are learning (Hebb's rule, Pareto frontier)

- self-organized systems / small-world networks / the universe(s) are intelligent (global energy/information optimization)

the universe: a hierarchy of neural networks

Network Nature has memory

Network Nature is learning

Network Nature is intelligent